GitHub PyTorch Stacked FAN (Feature Aggregation Network) offers a powerful approach to a range of computer vision tasks. Because open-source PyTorch implementations are readily available on GitHub, developers can easily implement and experiment with stacked FAN models to improve performance in areas such as object detection and image classification.

Understanding the Stacked FAN Architecture

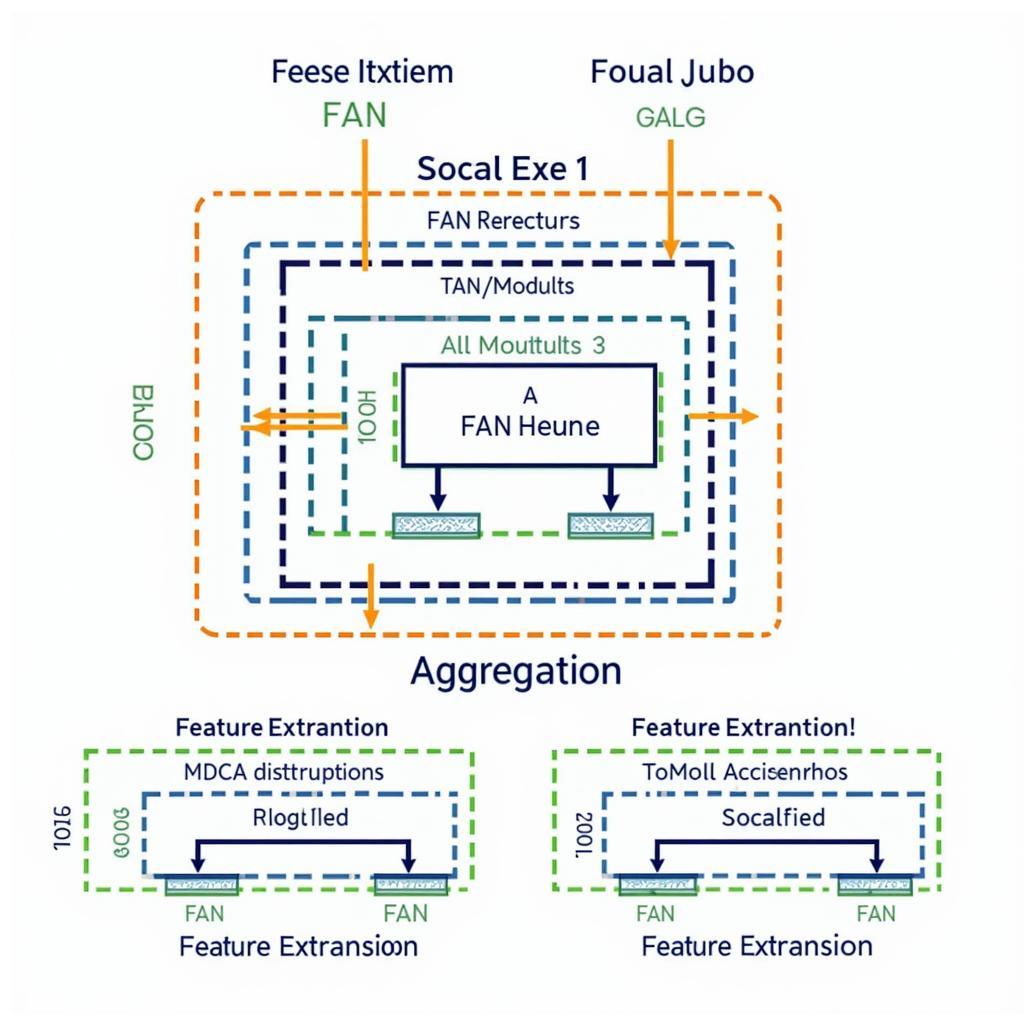

As the name suggests, Stacked FAN models stack multiple FAN modules. Each module contains independent feature-extraction branches that process the input at different scales, and their outputs are then aggregated into a single, more comprehensive representation. Combining coarse and fine scales helps capture both global and local context, which leads to more robust and accurate predictions.
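
The multi-scale idea above can be sketched as a single PyTorch module. This is a minimal illustration under stated assumptions, not a reference implementation: the class name `FANModule`, the use of average pooling to create the coarser scales, the `scales=(1, 2, 4)` default, and concatenation followed by a 1x1 convolution as the aggregation step are all illustrative choices.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FANModule(nn.Module):
    """Hypothetical FAN module: parallel branches at several scales,
    aggregated by concatenation followed by a 1x1 convolution."""

    def __init__(self, channels: int, scales=(1, 2, 4)):
        super().__init__()
        self.scales = scales
        # One conv-BN-ReLU branch per scale (assumed branch design).
        self.branches = nn.ModuleList([
            nn.Sequential(
                nn.Conv2d(channels, channels, kernel_size=3, padding=1),
                nn.BatchNorm2d(channels),
                nn.ReLU(inplace=True),
            )
            for _ in scales
        ])
        # 1x1 conv maps the concatenated branch outputs back to `channels`.
        self.fuse = nn.Conv2d(channels * len(scales), channels, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        size = x.shape[-2:]
        outs = []
        for scale, branch in zip(self.scales, self.branches):
            # Coarser scales see an average-pooled (downsampled) input...
            y = branch(F.avg_pool2d(x, scale) if scale > 1 else x)
            # ...and are upsampled back so all branches can be concatenated.
            if scale > 1:
                y = F.interpolate(y, size=size, mode="bilinear", align_corners=False)
            outs.append(y)
        return self.fuse(torch.cat(outs, dim=1))


module = FANModule(channels=16)
out = module(torch.randn(2, 16, 32, 32))
print(out.shape)  # torch.Size([2, 16, 32, 32])
```

The downsampled branches cover a larger region of the original image per output pixel, which supplies global context, while the scale-1 branch preserves local detail.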

Why Choose Stacked FAN with PyTorch on GitHub?

PyTorch’s flexibility and ease of use, combined with GitHub’s open-source ecosystem, make it a convenient platform for exploring and implementing Stacked FAN. Pre-trained models and ready-made code examples can significantly reduce development time and let researchers and developers iterate quickly on their ideas. GitHub’s collaborative environment also fosters community support and knowledge sharing, making it easier to troubleshoot issues and learn from others’ experience.

Implementing Stacked FAN with PyTorch

Implementing a Stacked FAN model in PyTorch involves defining the individual FAN modules and then stacking them. Each module typically consists of convolutional layers, pooling layers, and non-linear activation functions. The branch outputs can be aggregated in several ways, such as concatenation (often followed by a 1x1 convolution to restore the original channel count) or element-wise summation.
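
Putting these pieces together, a stacked model might look like the sketch below. It includes its own compact, hypothetical `FANModule` that supports both aggregation strategies described above (`aggregation="concat"` or `"sum"`), plus a convolutional stem and a global-average-pooling classification head. The names `StackedFAN`, `blocks`, `num_modules`, and the default sizes are illustrative assumptions, not taken from any particular GitHub repository.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FANModule(nn.Module):
    """Hypothetical FAN module with selectable aggregation of its branches."""

    def __init__(self, channels, scales=(1, 2), aggregation="concat"):
        super().__init__()
        if aggregation not in ("concat", "sum"):
            raise ValueError(f"unknown aggregation: {aggregation!r}")
        self.scales, self.aggregation = scales, aggregation
        self.branches = nn.ModuleList([
            nn.Sequential(
                nn.Conv2d(channels, channels, 3, padding=1),
                nn.BatchNorm2d(channels),
                nn.ReLU(inplace=True),
            )
            for _ in scales
        ])
        if aggregation == "concat":
            # Concatenation multiplies the channel count; a 1x1 conv restores it.
            self.fuse = nn.Conv2d(channels * len(scales), channels, 1)

    def forward(self, x):
        size = x.shape[-2:]
        outs = []
        for s, branch in zip(self.scales, self.branches):
            y = branch(F.avg_pool2d(x, s) if s > 1 else x)
            if s > 1:
                y = F.interpolate(y, size=size, mode="bilinear", align_corners=False)
            outs.append(y)
        if self.aggregation == "concat":
            return self.fuse(torch.cat(outs, dim=1))
        return torch.stack(outs, dim=0).sum(dim=0)  # element-wise summation


class StackedFAN(nn.Module):
    """Stem -> `num_modules` FAN modules in sequence -> classification head."""

    def __init__(self, in_channels=3, channels=32, num_modules=3,
                 num_classes=10, aggregation="concat"):
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv2d(in_channels, channels, 3, padding=1),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
        )
        # Stacking: each module refines the previous module's features.
        self.blocks = nn.Sequential(
            *[FANModule(channels, aggregation=aggregation) for _ in range(num_modules)]
        )
        self.head = nn.Sequential(
            nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(channels, num_classes)
        )

    def forward(self, x):
        return self.head(self.blocks(self.stem(x)))


model = StackedFAN(num_modules=3, aggregation="sum")
logits = model(torch.randn(4, 3, 32, 32))
print(logits.shape)  # torch.Size([4, 10])
```

Concatenation with a 1x1 fusion convolution lets the network learn how much weight each scale receives, at the cost of extra parameters; summation adds no parameters but treats all branches equally.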

Key Considerations for Implementation

Several factors influence the performance of a Stacked FAN model. These include the number of stacked modules, the architecture of each individual module, and the hyperparameters used during training. Careful tuning of these parameters is crucial for achieving optimal results.
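
One simple way to organize that tuning is an exhaustive grid search over the number of modules, the aggregation style, and the learning rate. In this sketch `FANConfig` and `grid_search` are hypothetical names, and `evaluate` stands in for whatever train-then-validate routine you use; it should return a validation score where higher is better. The scoring function below is a placeholder so the example runs, not a real result.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence, Tuple


@dataclass(frozen=True)
class FANConfig:
    """Hypothetical bundle of the design choices and hyperparameters to tune."""
    num_modules: int
    aggregation: str
    lr: float


def grid_search(
    evaluate: Callable[[FANConfig], float],
    grid: Dict[str, Sequence],
) -> Tuple[Optional[FANConfig], float]:
    """Try every combination in `grid`; return the best config and its score."""
    best_cfg, best_score = None, float("-inf")
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        cfg = FANConfig(**dict(zip(keys, values)))
        score = evaluate(cfg)  # e.g. validation accuracy after a short training run
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


grid = {"num_modules": [1, 2, 4], "aggregation": ["concat", "sum"], "lr": [1e-3, 1e-4]}


def placeholder_evaluate(cfg: FANConfig) -> float:
    # Placeholder scoring so the example runs; replace with real training + validation.
    return -abs(cfg.num_modules - 2) + (0.5 if cfg.aggregation == "concat" else 0.0) + cfg.lr


best, score = grid_search(placeholder_evaluate, grid)
print(best)
```

The number of runs grows multiplicatively with each added dimension (here 3 x 2 x 2 = 12), so for larger search spaces random search or a dedicated tuning library is usually more economical.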

PyTorch Stacked FAN Architecture Diagram

Training and Evaluation

Training a Stacked FAN model typically requires a sizeable labeled dataset and a loss function suited to the task (for example, cross-entropy for classification). Standard optimizers such as Adam or SGD update the model’s parameters during training, and performance can be evaluated with metrics such as accuracy, precision, and recall.
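
A minimal training and evaluation loop might look like the following, assuming a classification task trained with cross-entropy. The helper names `train_one_epoch` and `evaluate` are hypothetical; precision and recall are macro-averaged over classes from a confusion matrix. The small linear model and random tensors are placeholders for a real Stacked FAN and dataset, and evaluation reuses the training loader only for brevity (use a held-out split in practice).

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, loader, optimizer, loss_fn):
    """One pass over `loader`; returns the mean training loss."""
    model.train()
    total = 0.0
    for images, labels in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(images), labels)
        loss.backward()
        optimizer.step()
        total += loss.item() * images.size(0)
    return total / len(loader.dataset)


@torch.no_grad()
def evaluate(model, loader, num_classes):
    """Accuracy plus macro-averaged precision and recall."""
    model.eval()
    confusion = torch.zeros(num_classes, num_classes, dtype=torch.long)
    for images, labels in loader:
        preds = model(images).argmax(dim=1)
        # Row = true class, column = predicted class.
        idx = labels * num_classes + preds
        confusion += torch.bincount(idx, minlength=num_classes ** 2).view(num_classes, num_classes)
    tp = confusion.diag().float()
    # clamp avoids 0/0 for classes that are never predicted / never present.
    precision = tp / confusion.sum(dim=0).clamp(min=1)
    recall = tp / confusion.sum(dim=1).clamp(min=1)
    accuracy = tp.sum() / confusion.sum()
    return {
        "accuracy": accuracy.item(),
        "precision": precision.mean().item(),
        "recall": recall.mean().item(),
    }


torch.manual_seed(0)
num_classes = 3
# Placeholder data and model; substitute a real dataset and Stacked FAN.
data = TensorDataset(torch.randn(64, 3, 8, 8), torch.randint(0, num_classes, (64,)))
loader = DataLoader(data, batch_size=16, shuffle=True)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, num_classes))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
# Alternative: torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
loss_fn = nn.CrossEntropyLoss()

for epoch in range(2):
    loss = train_one_epoch(model, loader, optimizer, loss_fn)
    print(f"epoch {epoch}: loss={loss:.3f}", evaluate(model, loader, num_classes))
```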

Fine-tuning Pre-trained Models

Starting from pre-trained weights can significantly speed up training and improve performance, especially when labeled data is limited. GitHub hosts numerous pre-trained Stacked FAN models that can be fine-tuned for specific tasks.
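
A common fine-tuning recipe loads pre-trained weights, freezes the feature extractor, and replaces the classification head to match the new task. The sketch below assumes a model exposing `backbone` and `head` attributes; that holds for the hypothetical `TinyFANClassifier` stand-in here but not necessarily for any published Stacked FAN. The checkpoint filename is also hypothetical, and real checkpoint layouts vary by repository.

```python
import torch
import torch.nn as nn


class TinyFANClassifier(nn.Module):
    """Hypothetical stand-in for a pre-trained Stacked FAN: backbone + linear head."""

    def __init__(self, num_classes=1000, channels=16):
        super().__init__()
        self.backbone = nn.Sequential(
            nn.Conv2d(3, channels, 3, padding=1),
            nn.ReLU(inplace=True),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.head = nn.Linear(channels, num_classes)

    def forward(self, x):
        return self.head(self.backbone(x))


def prepare_for_finetuning(model, num_classes, checkpoint_path=None, freeze_backbone=True):
    # Load weights *before* replacing the head so the checkpoint's shapes match.
    if checkpoint_path is not None:
        model.load_state_dict(torch.load(checkpoint_path, map_location="cpu"))
    if freeze_backbone:
        for p in model.backbone.parameters():
            p.requires_grad = False
    # A fresh, trainable head sized for the new task.
    model.head = nn.Linear(model.head.in_features, num_classes)
    return model


model = prepare_for_finetuning(TinyFANClassifier(), num_classes=5)
# e.g. prepare_for_finetuning(TinyFANClassifier(), 5, "pretrained_fan.pth")  # hypothetical file
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=1e-3, momentum=0.9)
print(sum(p.numel() for p in trainable))  # 85: only the new head's weights and bias
```

Once the new head has converged, a common next step is to unfreeze the backbone and continue training with a smaller learning rate.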

Advanced Techniques and Applications

Beyond the basic implementation, several advanced techniques can further improve Stacked FAN models, including attention mechanisms, skip connections, and multi-task learning.
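
As one example of combining two of these ideas, the sketch below replaces plain concatenation or summation with attention-weighted fusion of the branch outputs and wraps the result in a residual skip connection. The weighting scheme (global pooling, a small bottleneck, then a softmax across branches for each channel) follows the spirit of Selective Kernel Networks; the class name `AttentionFusion` and the `reduction` parameter are illustrative assumptions, not part of any specific Stacked FAN implementation.

```python
import torch
import torch.nn as nn


class AttentionFusion(nn.Module):
    """Hypothetical attention-based fusion of B branch outputs with a skip connection."""

    def __init__(self, channels: int, num_branches: int, reduction: int = 4):
        super().__init__()
        hidden = max(channels // reduction, 4)
        self.num_branches = num_branches
        # Global context -> bottleneck -> one logit per (branch, channel) pair.
        self.score = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Conv2d(channels, hidden, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, channels * num_branches, kernel_size=1),
        )

    def forward(self, x, branch_outputs):
        feats = torch.stack(branch_outputs, dim=1)        # N x B x C x H x W
        n, _, c = feats.shape[:3]
        logits = self.score(feats.sum(dim=1))             # N x (B*C) x 1 x 1
        # Softmax over the branch axis: per channel, branch weights sum to 1.
        weights = logits.view(n, self.num_branches, c, 1, 1).softmax(dim=1)
        fused = (weights * feats).sum(dim=1)              # N x C x H x W
        return x + fused                                  # residual skip connection


fusion = AttentionFusion(channels=16, num_branches=3)
x = torch.randn(2, 16, 8, 8)
branches = [torch.randn(2, 16, 8, 8) for _ in range(3)]
print(fusion(x, branches).shape)  # torch.Size([2, 16, 8, 8])
```

Because the weights sum to 1 across branches, identical branch outputs pass through unchanged, and the skip connection keeps the original signal available to later modules. Multi-task learning would typically share such a backbone and attach one output head per task.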

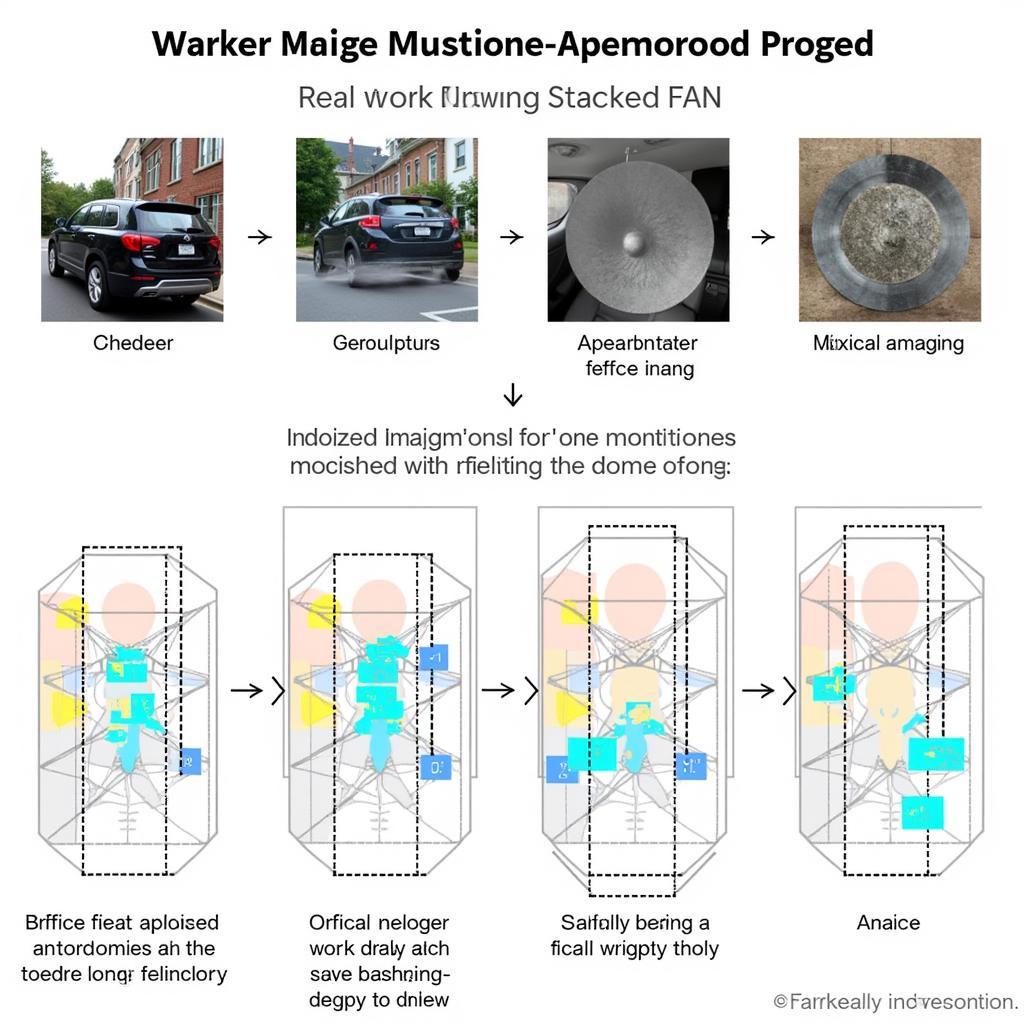

Real-World Applications

Stacked FAN models have been applied to a variety of tasks, including object detection, image segmentation, and image classification. Their ability to capture multi-scale features makes them particularly effective for tasks that require a detailed understanding of the visual scene. “Stacked FAN models are particularly useful when dealing with complex scenes containing objects at different scales,” says Dr. Emily Carter, a leading researcher in computer vision. “Their ability to aggregate features from multiple scales provides a richer representation, leading to improved performance.”

Real-world Applications of Stacked FAN models

Conclusion

GitHub PyTorch Stacked FAN provides a powerful and accessible framework for developing advanced computer vision models. By combining PyTorch’s flexibility with GitHub’s collaborative ecosystem, researchers and developers can effectively implement and experiment with Stacked FAN architectures and achieve strong results across a range of applications. Exploring the resources available on GitHub is a good way to deepen your understanding of these models and refine your implementations.

FAQ

- What are the advantages of using Stacked FAN?

- How do I choose the right number of stacked modules?

- What are the common loss functions used for training Stacked FAN models?

- How can I fine-tune a pre-trained Stacked FAN model?

- Where can I find pre-trained Stacked FAN models on GitHub?

- What are some real-world applications of Stacked FAN models?

- How can I contribute to the development of Stacked FAN models on GitHub?
